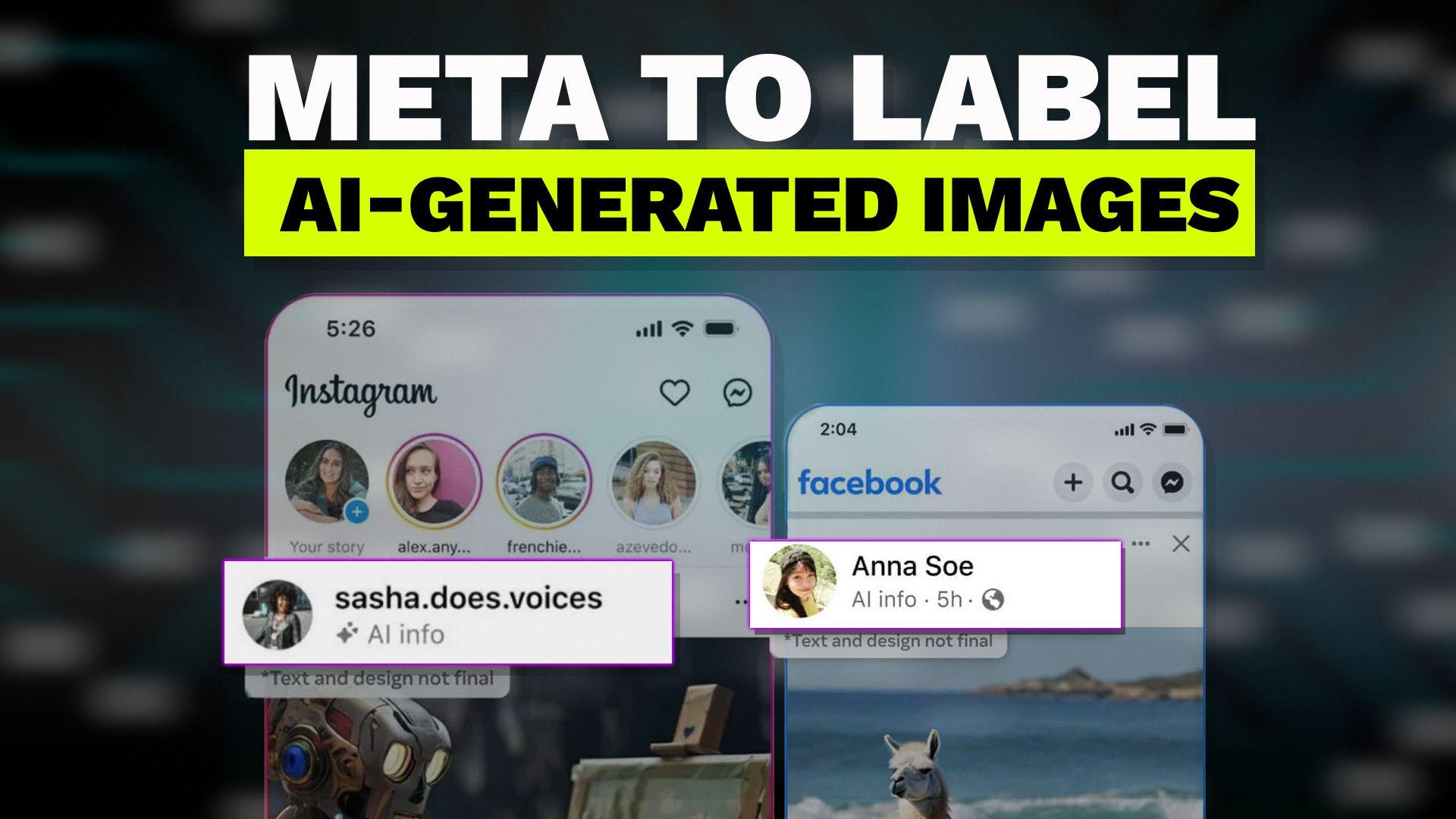

Facebook and Instagram Introduce Labels for AI-Generated Content

In a bid to tackle the proliferation of AI-generated content, Facebook and Instagram are rolling out a groundbreaking labeling system. As users scroll through their social media feeds, they'll now encounter conspicuous labels identifying AI-generated images. This move comes as part of a broader effort within the tech industry to combat the dissemination of misleading or harmful content.

The initiative, spearheaded by Meta in collaboration with industry partners, aims to establish technical standards for recognizing AI-generated media across various formats, including images, videos, and audio. By implementing these labels, the platforms seek to give users greater transparency and help them distinguish between authentic and AI-generated content.

With the rapid advancements in AI technology, distinguishing between real and synthetic content has become increasingly challenging. From deepfake videos to computer-generated imagery (CGI), the digital landscape is rife with content that blurs the lines of reality. Such content not only poses risks to individual users but also undermines the integrity of online discourse and public trust.

By introducing labels for AI-generated content, Facebook and Instagram are taking proactive steps to mitigate the potential harms associated with misinformation and deceptive media. However, the effectiveness of this labeling system remains uncertain: labels may alert users to the presence of AI-generated content, but whether they will curb its spread is yet to be seen.

Critics argue that labeling alone may not be enough to address the underlying challenges posed by AI-generated media. Alongside labeling, robust content moderation and detection measures are imperative for safeguarding online platforms against manipulation and exploitation.
